I am pleased to present my latest project: a fully automated deployment of LibreChat on AWS EC2, published as an open-source project on GitLab. This project showcases my expertise in DevOps and cloud engineering, leveraging Terraform for infrastructure as code (IaC), a Bash User-Data script, a GitLab CI/CD pipeline, and centralized management via AWS Systems Manager (SSM). The result: a LibreChat instance up and running in under 6 minutes, with optimized costs, enhanced security, and maximum accessibility.

This project goes beyond a simple technical deployment: it embodies a vision of advanced automation, designed for both experts and beginners. Here is how I designed this solution.

1. An Optimized and Reproducible Cloud Architecture

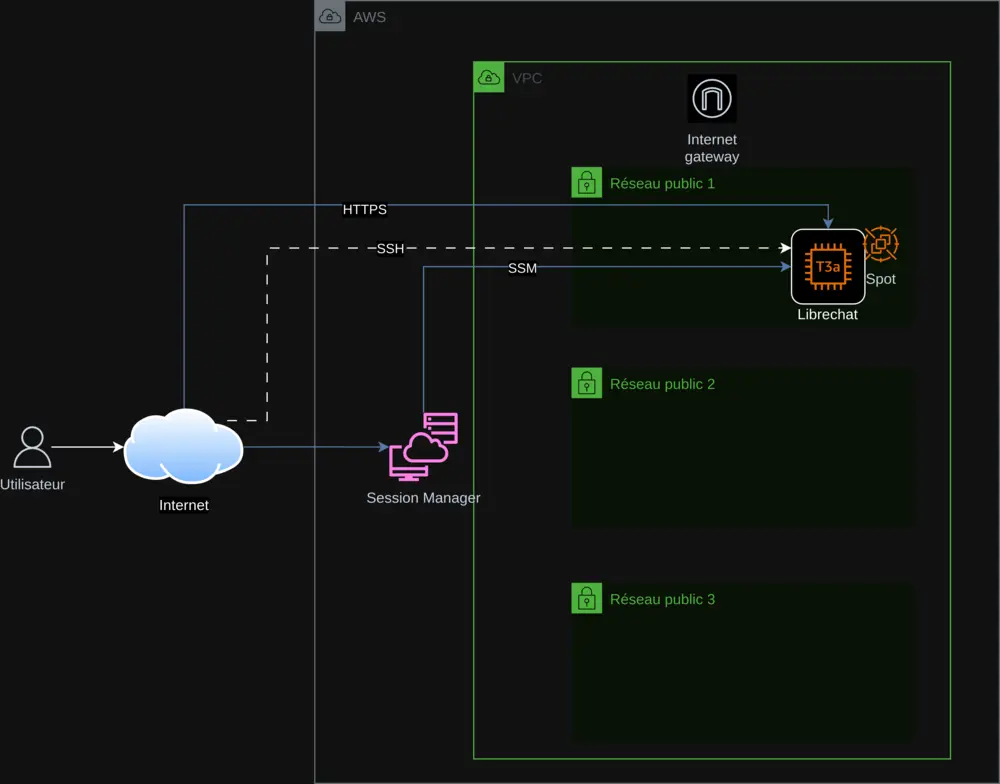

The infrastructure is built with Terraform, following Infrastructure as Code principles for seamless reproducibility and scalability. The main components include:

- AWS VPC: an isolated virtual network to secure the infrastructure.

- Public subnets: three subnets to ensure high availability.

- EC2 Instance (t3a.small): hosts LibreChat, with a choice between Spot Instances or On-Demand.

- Internet Gateway: gives the public subnets internet connectivity, so the instance can be reached over HTTPS.

- AWS SSM: centralizes API key management and deployment monitoring.

Deployment on EC2 is automated via a Bash User-Data script that:

- Installs dependencies (Docker, Git, Node.js) and updates the system.

- Clones LibreChat (version 0.7.7) and configures the .env and librechat.yaml files.

- Retrieves API keys from SSM securely.

- Configures Nginx with a self-signed SSL certificate for HTTPS.

This script, integrated via Terraform, includes error handling (set -e, trap 'error_handler' ERR) and logging into /var/log/user-data.log, ensuring a reliable and traceable process.

2. Security and Centralized API Key Management

Security is a priority. API keys (OpenAI, Mistral AI, Anthropic, Google) are stored in AWS SSM Parameter Store, retrieved dynamically, and never hard-coded. Measures include:

- HTTPS enforced: self-signed certificate with redirect from 80 to 443.

- Error handling: script halts on failure, with status updates in SSM.

- AWS Session Manager: access to the instance without SSH for improved security.

Together, these measures keep secrets out of the code and make the deployment status visible in SSM as the script progresses.
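To illustrate the retrieval step, here is a minimal Python sketch. It is not the project's implementation (the User-Data script is written in Bash), and the parameter paths under `/librechat/`, the environment variable names, and the helper names are all assumptions made for the example. The only AWS call it relies on is the boto3 SSM method `get_parameter` with `WithDecryption=True`.

```python
from typing import Dict, Mapping

# Hypothetical mapping from .env variable names to SSM parameter paths.
# These names are assumptions for illustration, not the project's real ones.
PARAMETER_PATHS: Dict[str, str] = {
    "OPENAI_API_KEY": "/librechat/openai_api_key",
    "MISTRAL_API_KEY": "/librechat/mistral_api_key",
}


def fetch_api_keys(ssm_client, paths: Mapping[str, str]) -> Dict[str, str]:
    """Read each parameter from SSM and return {env_name: secret}."""
    keys: Dict[str, str] = {}
    for env_name, path in paths.items():
        # WithDecryption=True asks SSM to decrypt SecureString values.
        response = ssm_client.get_parameter(Name=path, WithDecryption=True)
        keys[env_name] = response["Parameter"]["Value"]
    return keys


def render_env_lines(keys: Mapping[str, str]) -> str:
    """Format the secrets as KEY=value lines, sorted by variable name."""
    return "".join(f"{name}={value}\n" for name, value in sorted(keys.items()))
```

With AWS credentials available, passing `boto3.client("ssm")` as `ssm_client` would perform the real calls. Taking the client as an argument keeps the secrets out of the source and lets the helpers be exercised with a stub.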

3. Cost Optimization with Spot Instances

To reduce costs, I integrated AWS Spot Instances, leveraging unused capacity. A Terraform variable spot_enabled allows choosing between Spot and On-Demand, making the project accessible to everyone. A Python script, check_spot.py, automatically adjusts prices in variables.tf for continuous optimization.
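The article does not show check_spot.py, so the following is only a sketch of how such a script might update a price default in variables.tf. The variable name `spot_max_price`, the 10% safety margin, and the assumption that the default is a quoted string are all hypothetical. The input records mirror the shape returned by the EC2 `describe_spot_price_history` API, where `SpotPrice` is a string.

```python
import re
from typing import Iterable, Mapping


def suggested_max_price(history: Iterable[Mapping[str, str]], margin: float = 0.10) -> str:
    """Return the highest observed Spot price plus a safety margin, as a string."""
    highest = max(float(record["SpotPrice"]) for record in history)
    return f"{highest * (1 + margin):.4f}"


def set_variable_default(tf_text: str, variable: str, value: str) -> str:
    """Replace the quoted default inside one `variable "<name>" { ... }` block."""
    pattern = re.compile(
        r'(variable\s+"' + re.escape(variable) + r'"\s*\{[^}]*?default\s*=\s*")[^"]*(")'
    )
    updated, count = pattern.subn(lambda m: m.group(1) + value + m.group(2), tf_text)
    if count != 1:
        # Refuse to write anything if the block is missing or ambiguous.
        raise ValueError(f'expected exactly one default for variable "{variable}"')
    return updated
```

A regular expression is enough for a flat variables.tf like the one assumed here; a file with nested braces inside variable blocks would need a real HCL parser.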

4. A Complete CI/CD Pipeline with GitLab

A GitLab CI/CD pipeline (defined in .gitlab-ci.yml) orchestrates the deployment:

- Terraform: plans and applies infrastructure changes.

- Instance management: starts, stops, and checks status via SSM.

- API keys: adds or removes keys securely.

- Registrations: controls access to LibreChat.

The script export.sh simplifies commands, whether run locally or via CI/CD, providing a smooth, professional experience.
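The status check could look something like the sketch below, which polls a status value in SSM Parameter Store until the deployment finishes. The parameter path `/librechat/deployment-status` and the status values `COMPLETED` and `FAILED` are assumptions for illustration; the project's actual names are not given in this article. The `sleep` argument is injectable so the loop can be tested without waiting.

```python
import time
from typing import Callable


def wait_for_deployment(
    ssm_client,
    parameter_name: str = "/librechat/deployment-status",  # hypothetical path
    success: str = "COMPLETED",  # hypothetical status values
    failure: str = "FAILED",
    attempts: int = 40,
    delay_seconds: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll the status parameter; True on success, False on failure or timeout."""
    for _ in range(attempts):
        response = ssm_client.get_parameter(Name=parameter_name)
        status = response["Parameter"]["Value"]
        if status == success:
            return True
        if status == failure:
            return False
        # Any other value is treated as "still in progress".
        sleep(delay_seconds)
    return False
```

In a pipeline job, a `False` return would typically be turned into a non-zero exit code so that the job fails visibly.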

5. Global Accessibility through Internationalization

To reach a global audience, the README is translated into six languages (English, German, Spanish, Japanese, Korean, Chinese) thanks to my AI translation script. This makes the project inclusive and suitable for all users.

6. Technical Challenges Successfully Addressed

Adapting LibreChat 0.7.6 required precise adjustments:

- Mistral support: updating librechat.yaml for mistral-large-latest.

- Nginx: fixing SSL directives for HTTP/2.

- Spot/On-Demand: flexible handling via Terraform.

These challenges were overcome with rigorous testing and detailed documentation.

7. Why This Project Stands Out

This project stands out for:

- Complete automation: everything is scripted, from infra to installation.

- Optimization: reduced costs and improved performance.

- Security: centralized management and HTTPS by default.

- Accessibility: multilingual documentation and simple options.

It reflects my skills in AWS Architecture, DevOps, cloud, and scripting, as well as my passion for open source.

Discover and Contribute!

Explore this project on GitLab, try it out, and share your feedback. Whether you’re an expert or a beginner, this project is for you. Contribute to help it grow!

Contact: contact@jls42.org

About me: I am Julien LS, passionate about AWS cloud architecture, DevOps, AI, and automation. This project is part of my body of work, alongside others such as BabelFish AI. Find me on GitLab.

This document was translated from the French (fr) version into English (en) using the gpt-5-mini model. For more information on the translation process, see https://gitlab.com/jls42/ai-powered-markdown-translator